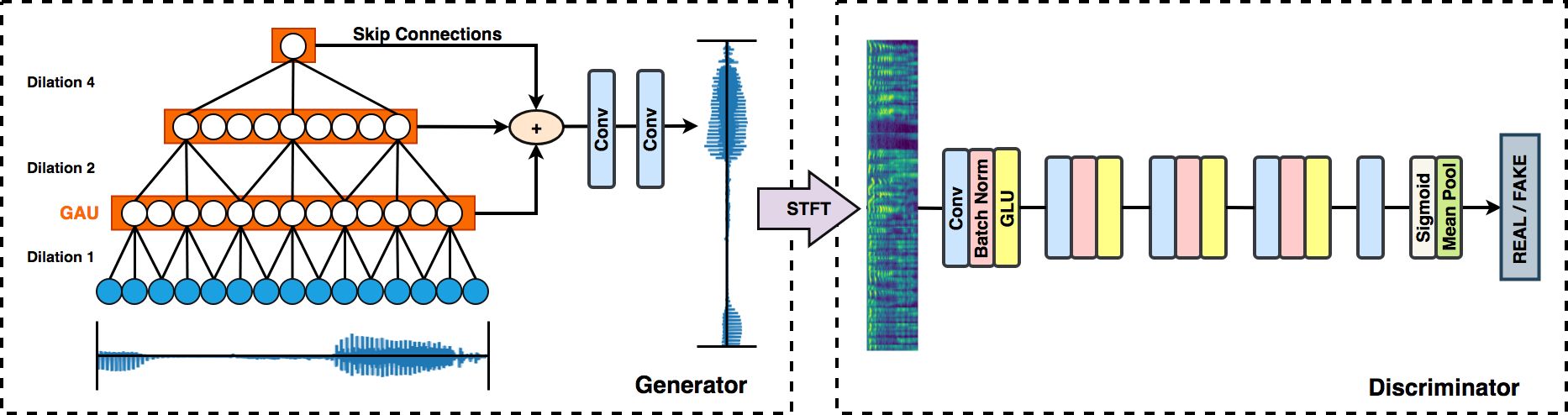

We introduce a data-driven method to enhance speech recordings made in a specific environment. The method handles denoising, dereverberation, and equalization matching due to recording nonlinearities in a unified framework. It relies on a new perceptual loss function that combines an adversarial loss with a loss on spectrogram features. In both subjective and objective evaluations, the method improves on state-of-the-art baseline methods.
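The abstract does not specify the exact feature set, discriminator objective, or weighting. The sketch below only shows the general shape of an objective that adds an adversarial term to a spectrogram-feature term. Several choices are assumptions, not the method's actual design: the log-magnitude STFT features, the L1 distance, the least-squares GAN generator loss, and the weights `lambda_spec` and `lambda_adv`. All function names are hypothetical.

```python
import numpy as np

def log_spectrogram(x, n_fft=512, hop=128, eps=1e-8):
    """Log-magnitude STFT of a 1-D signal (Hann window, no padding); assumed feature."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))
    return np.log(mag + eps)

def spectrogram_feature_loss(enhanced, clean):
    """Mean L1 distance between log-spectrograms of enhanced and clean speech."""
    return float(np.mean(np.abs(log_spectrogram(enhanced) - log_spectrogram(clean))))

def adversarial_generator_loss(d_scores_fake):
    """Least-squares GAN generator loss (assumed form): push discriminator scores toward 1."""
    return float(np.mean((d_scores_fake - 1.0) ** 2))

def combined_loss(enhanced, clean, d_scores_fake, lambda_spec=1.0, lambda_adv=1.0):
    """Hypothetical weighted sum of the adversarial and spectrogram-feature terms."""
    return (lambda_adv * adversarial_generator_loss(d_scores_fake)
            + lambda_spec * spectrogram_feature_loss(enhanced, clean))

# Toy usage with synthetic signals (1 s at an assumed 16 kHz sample rate).
rng = np.random.default_rng(0)
clean = rng.standard_normal(16000)
noisy = clean + 0.1 * rng.standard_normal(16000)
print(combined_loss(clean, clean, np.ones(4)))         # 0.0
print(combined_loss(noisy, clean, np.full(4, 0.5)))    # adversarial term 0.25 plus a positive spectrogram term
```

In a real training loop, the discriminator scores would come from a network trained jointly with the enhancement model. The gradients would also flow through a differentiable framework rather than NumPy.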